Every CRO expert knows that data is the key to our experiments. But too often we stop at the data we’ve collected during a test and expect it to tell the whole story behind our tests and improvements – without considering the relevant data from before the test and after it. In my experience, this is the critical mistake that leaves our stakeholders underwhelmed and unappreciative of the work we do.

In today’s article, we’ll review how data can inform the three stages of any good CRO test – before, during, and after. We’ll discover what to look for in creating your CRO tests, what data to measure during them, what to report on afterwards to show value in your experiments, and how to implement the best ones across a website.

Before the “Before You Begin” Part

As a digital marketer, it is absolutely crucial to have faith in your data. Often, the systems we’re handed are imperfect: they’re set up for specific results that we no longer agree with or that are no longer relevant, or they’re set up incorrectly. These systems – be they your web analytics like Google Analytics, your customer databases in email and automation platforms, your CRM of choice, or even your testing platforms – are necessary for testing.

The adage “good data in means good data out” is true. But don’t be paralyzed by an imperfect system. All things being equal, having some data you can rely on can be enough to test and make improvements on your websites.

As analytics expert Avinash Kaushik recently said on his Occam’s Razor blog, “The very best analysts are comfortable operating with ambiguity and incompleteness, while all others chase perfection in implementation.” Words to live by, friends. Work where you have clarity and confidence, and solve for perfection another day.

The one caveat I’ll add is to make sure your data sources are set up to segment information correctly. Data segmentation is key to nearly everything you will do as a digital marketer. For testing, it’s most important to have access to the following segments:

- Pages or areas of your website that you want to test

- Audiences that you want to include or exclude in your tests

- Activities and actions that signify a change in status for a target user on your website (such as marketing-qualified lead and sales-qualified lead actions, lead scoring, etc.)

- Channels that your web traffic comes through

Depending on your business, there’s likely more. But making sure you’ve got the basics covered will help you in the long run – not just in the testing, but in proving your results worthwhile as well.

Another question you’ll need to answer is exactly what to test, and when. You’ll want to run tests with the potential to reach statistically significant results, so ad campaigns, high-traffic emails, and other high-volume traffic sources are your best bet.

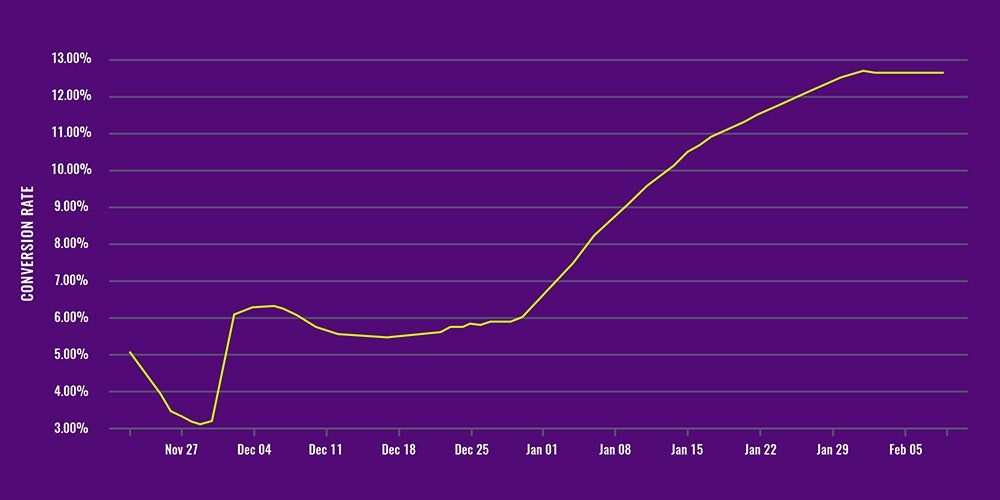

In one testing program E3 ran for a client’s end-of-year sales event, the volume of traffic let the team run multiple tests, iteratively improving the experience over the campaign’s three-month course. The results were impressive: a 107% total increase in conversion rates for the campaign landing pages.

Be sure to shape your tests accordingly to maximize significance, opportunity, and potential results.

Guiding Your Testing, A.K.A. The “Before You Begin” Part

Now that we’ve covered being confident in the state of our data, let’s look at what we should do before we begin testing.

The biggest key here is to look at your data before you test. This sets appropriate baselines for the tests to come, and if you don’t know where or what to test, your data should be your primary guide for discovering that, too.

Use your segmentation to look for patterns of performance. It’s easier to test a smaller segment, though you risk lower statistical significance, or needing more time to achieve it. The key is in matching up different segments to find actionable areas of improvement.

For instance, say you’re looking at a landing page for newsletter sign-ups and wondering whether to test the location of a form or another element to improve conversion rates. What you should test can vary greatly depending on how you look at the data. If the page has a low conversion rate, it very well could be that the form is the issue. But…let’s say you segment your data by channel, and then again by mobile vs. desktop.

You could get results like these two for the landing page, or LP (cherry-picked for the purpose of illustration):

- Mobile traffic to LP = 1% lower conversion rate

- Paid search traffic to LP = 2% lower conversion rate

This insight should challenge your assumption that the landing page itself is at fault for the poor conversion rate. Consider the factors above – what if only 10% of your traffic is coming from mobile? That should cast doubt on mobile being the big issue. What if 80% of the traffic is coming from paid search ads? A-ha! Now you’ve located the problem…and it’s likely that you need to review the ads and the landing page together to determine how well they align.
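If you want to put rough numbers on this kind of reasoning, one simple approach is to multiply each segment’s share of traffic by how far its conversion rate trails the rest of the page’s visitors. Here’s a minimal Python sketch of that idea. It assumes the “lower conversion rate” figures above are percentage points relative to all other visitors, uses the hypothetical traffic shares from the what-if questions, and looks at each segment on its own (mobile and paid search visits can overlap, so the two results shouldn’t be added together).

```python
from dataclasses import dataclass

@dataclass
class Segment:
    name: str
    traffic_share: float  # fraction of all landing page visits, from 0 to 1
    gap_pts: float        # percentage points this segment converts below everyone else

def overall_rate_drag(segment: Segment) -> float:
    """Percentage points by which this segment pulls down the page's overall conversion rate.

    If everyone else converts at rate r, the page converts at
    share * (r - gap) + (1 - share) * r = r - share * gap,
    so the drag attributable to the segment is share * gap.
    """
    return segment.traffic_share * segment.gap_pts

# Hypothetical shares taken from the what-if questions above.
segments = [
    Segment("mobile", traffic_share=0.10, gap_pts=1.0),
    Segment("paid search", traffic_share=0.80, gap_pts=2.0),
]

# Largest drag first: that's where to look before redesigning the page.
for seg in sorted(segments, key=overall_rate_drag, reverse=True):
    print(f"{seg.name}: drags the overall rate down by {overall_rate_drag(seg):.2f} points")
```

With these numbers, paid search accounts for 16 times the drag of mobile, which is the same conclusion the questions above point to.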

If they don’t, you likely have two very valid things to test:

- Adjusting the landing page to better align with the ads; or

- Adjusting the ads to better preview the offer on the landing page.

Deciding which test to conduct can then be another study in data – where your budget lies, how easy it is to adjust a landing page versus writing or adjusting ad copy, and so on.

There can be several ways to view a problem. Don’t shy away from testing because of this – lean into your data to find the right things to test. Plan first, and only then attack.

Your best tests will tie back to either marketing goals or business initiatives. That doesn’t mean every test needs to directly influence these goals, but each one should consider its relationship to them, because that relationship will help frame your post-test results and their value.

Consider this test E3 implemented for Airstream. On its website, Airstream features a Build Your Own interactive tool for the Touring Coach product line. The team proposed a test adding a prominent call-to-action (CTA) on the product category page for the Touring Coaches, replacing another CTA that hadn’t performed well.

The test concluded with a 5.6% click-through rate on the new Build Your Own CTA. This drove a massive 24,000% increase in traffic to the tool, which had previously been linked only from the website navigation and some lead nurturing emails.

The test was a success – it did increase traffic to the Build Your Own tool. But the reason we proposed the test in the first place came from another piece of homework we had done beforehand…well beforehand, actually. As part of a lead scoring project, the E3 team discovered that of all web leads that became customers (i.e., purchased a Touring Coach), 50% or more had used the Build Your Own tool to some extent before becoming customers.

Armed with this data, a 24,000% increase (easy to achieve when little traffic was going to the tool to begin with) and a 5.6% CTR (very impressive, but lacking context) suddenly carry a lot of meaning.
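The point about small baselines is simple arithmetic: percent change divides the gain by the starting point, so a tiny baseline inflates the percentage. This minimal Python sketch uses hypothetical visit counts (not Airstream’s actual figures) to show the same absolute gain producing very different percentages.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed in percent."""
    if before <= 0:
        raise ValueError("baseline must be positive to compute a percent change")
    return (after - before) / before * 100

# Hypothetical monthly visits: the same +4,800 visits on two very different baselines.
small_base = percent_change(20, 4_820)
large_base = percent_change(100_000, 104_800)

print(f"small baseline: {small_base:,.0f}% increase")
print(f"large baseline: {large_base:.1f}% increase")
```

Reporting the absolute numbers alongside the percentage is what gives a headline figure its context.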

Knowing the environment you’re testing in is crucial, and knowing how things link together can help show that a test that seems far removed from a business goal actually has a very positive impact on it.

Just What Are You Testing, Anyway?

Once you’ve got your data set up and you’ve done your planning, then…well, this should be the easy part, right? If you did your homework correctly and built your test around affecting a specific metric for a specific segment, then the data to watch during your test should be self-evident.

In the hypothetical paid search example above, depending on what you decided, you’re either:

- Testing new ads, and looking at click-through rates and paid search-specific traffic conversion rates

- Testing a new landing page, and looking at all traffic channels’ conversion rates

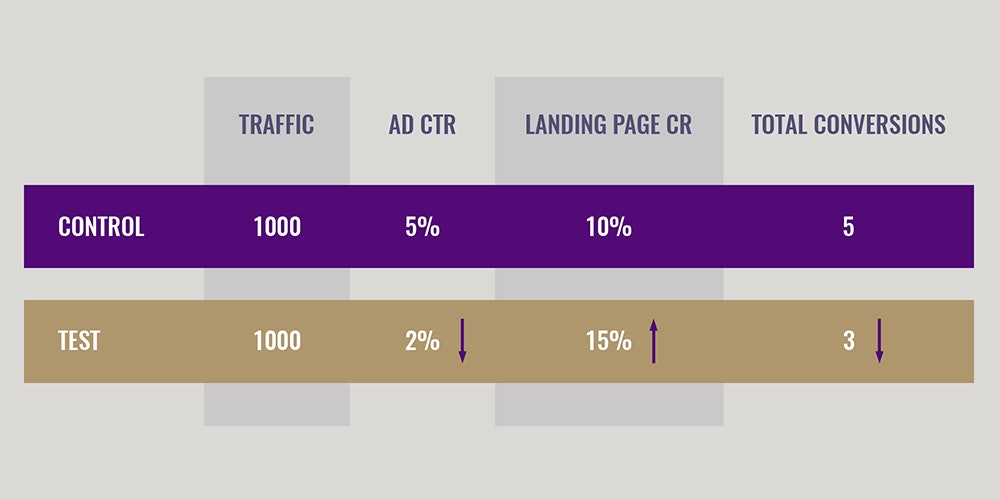

Test 1 has more factors to review, doesn’t it? Why? Because if your conversion rate improves but the click-through rate goes down, the net result may not be worth it. You have to review both and weigh the data for the final net result – total conversions – to make sure your test actually improved the situation. You could have a negative result in either CTR or conversion rate, and the test may still have a positive impact on the end result. Hopefully, both major metrics improve, but you definitely have to watch both.
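Here’s a quick way to see why total conversions settle the question. In this minimal Python sketch, total conversions are modeled as impressions × ad CTR × landing page conversion rate, and every figure is hypothetical. Both variants lower CTR and raise the conversion rate: variant A loses on total conversions, while variant B takes the same CTR hit and still comes out ahead.

```python
def expected_conversions(impressions: int, ctr: float, cvr: float) -> float:
    """Impressions -> ad clicks (via CTR) -> landing page conversions (via conversion rate)."""
    return impressions * ctr * cvr

IMPRESSIONS = 100_000  # hypothetical ad impressions per test arm

results = {
    "control": expected_conversions(IMPRESSIONS, ctr=0.030, cvr=0.040),
    "variant A": expected_conversions(IMPRESSIONS, ctr=0.025, cvr=0.045),
    "variant B": expected_conversions(IMPRESSIONS, ctr=0.025, cvr=0.050),
}

control = results["control"]
for name, total in results.items():
    print(f"{name}: {total:.1f} conversions ({total - control:+.1f} vs. control)")
```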

When testing, pay attention to the entire picture – total conversions – to determine if you should actually implement the change.

And in Test 2, why are we looking at conversion rates for every channel when we are concerned only with paid search traffic? For the same reason: if we improve the paid search conversion rate but the net result is a loss in total conversions, our test technically succeeded…but the net result shows we shouldn’t implement the change, because it would hurt the page’s overall results. A better idea would be to build a new landing page from the test variant for paid search traffic only, and keep the original page as the LP for other channels.
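The same check works across channels. This minimal Python sketch uses hypothetical visits and conversion rates in which the test page wins on paid search but loses elsewhere, then compares three options: keep the original page, roll the test page out to everyone, or serve the test page to paid search only.

```python
# Hypothetical monthly visits and landing page conversion rates by channel.
visits = {"paid search": 8_000, "organic": 1_500, "email": 500}
original_cvr = {"paid search": 0.020, "organic": 0.050, "email": 0.060}
test_page_cvr = {"paid search": 0.024, "organic": 0.025, "email": 0.030}

def total_conversions(cvr_by_channel: dict[str, float]) -> float:
    """Sum of visits x conversion rate across every channel."""
    return sum(visits[channel] * cvr_by_channel[channel] for channel in visits)

# Split option: test page for paid search only, original page for every other channel.
split_cvr = {
    channel: test_page_cvr[channel] if channel == "paid search" else original_cvr[channel]
    for channel in visits
}

options = {
    "original page everywhere": total_conversions(original_cvr),
    "test page everywhere": total_conversions(test_page_cvr),
    "test page for paid search only": total_conversions(split_cvr),
}
for label, total in options.items():
    print(f"{label}: {total:.1f} conversions")
```

Here the test page wins its own channel yet lowers total conversions when rolled out everywhere, while the split option beats both.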

As you’re setting up your tests and reviewing your results, be sure you’re keeping an eye on the following guidelines:

- The Right Stats

- Statistical Relevance

- Gut Calls

The Right Stats

A word of caution: be aware of the effect your test has on other metrics, as discussed above. Some matter more than others. Lower “time on page,” for example, may not matter on landing pages – if you’re converting users faster and conversion rates are going up, that’s a good thing.

Decide which metrics matter, and establish appropriate baselines so you can weigh the implications, even outside of your testing platforms.

Paying attention to the wrong stats, or the right stats but in the wrong way, can lead you astray. Plan accordingly.

Statistical Relevance

Getting into all the ins and outs of statistical significance could fill a long blog post of its own. Hopefully you remember your hard work in your college statistics class; if not, brush up.

Thankfully, the testing platforms you should be using provide this kind of analysis as you set up your tests. Still, you can always plan beforehand where your results need to be, and online calculators can certainly help.
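If you’d like to sanity-check a result yourself, many online calculators use some form of the classic two-proportion z-test. Here’s a minimal Python sketch of that test using only the standard library. It’s one common frequentist approach, not necessarily what your testing platform runs (some use Bayesian or sequential methods), and the visitor and conversion counts are hypothetical.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the conversion rates of control (a) and variant (b).

    Returns (z, two-sided p-value) using the pooled-variance z-test.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Same 2.0% vs. 2.5% conversion rates at two different sample sizes (hypothetical).
for conv_a, conv_b, visitors in [(20, 25, 1_000), (200, 250, 10_000)]:
    z, p = two_proportion_z_test(conv_a, visitors, conv_b, visitors)
    print(f"{visitors:,} visitors per arm: z = {z:.2f}, p = {p:.3f}")
```

With identical conversion rates, the smaller test is nowhere near the conventional 0.05 threshold, while the larger one clears it. That’s why traffic volume matters so much when you decide what to test.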

Statistical significance is real. You don’t want to base a bunch of changes on false-positive results.

Then again…

Gut Calls

Sometimes, you just don’t have the traffic, or enough traffic in an appropriate amount of time, to get a statistically significant result. You should always try to conduct your tests properly…but sometimes you just know a thing is right.

Sometimes, as a marketer, you’ve got to trust your gut.

When you go with those gut calls, the most important thing to remember is to always follow these two rules:

- Check your work after the change, to make sure it’s still performing as expected; and

- Trust your gut in areas where you can pivot back quickly.

It’s OK to let it fly sometimes…but I wouldn’t stake my career on a silly notion. When you go with a “gut call,” be sure you have a plan for consistently monitoring the results post-change (and you should ALWAYS still test something – you just might not wait for the most-assured results), and a plan for pivoting back if the results turn negative.
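A monitoring plan can be as simple as a rule you agree on before making the change. This minimal Python sketch assumes a hypothetical rule: pivot back if the conversion rate falls more than 10% below the pre-change baseline for two weeks in a row. The function name, thresholds, and weekly rates are all illustrative, so tune them to your own traffic and risk tolerance.

```python
def should_pivot_back(
    baseline_rate: float,
    weekly_rates: list[float],
    tolerance: float = 0.10,
    consecutive_weeks: int = 2,
) -> bool:
    """Return True when each of the most recent `consecutive_weeks` weekly conversion
    rates is more than `tolerance` (relative) below the pre-change baseline."""
    floor = baseline_rate * (1 - tolerance)
    recent = weekly_rates[-consecutive_weeks:]
    # Not enough weeks of data yet means no rollback signal.
    return len(recent) == consecutive_weeks and all(rate < floor for rate in recent)

# Hypothetical weekly conversion rates after a gut-call change, against a 4.0% baseline.
steady = [0.042, 0.039, 0.041]
slipping = [0.042, 0.038, 0.035, 0.034]

print(should_pivot_back(0.040, steady))    # recent weeks hold above the 3.6% floor
print(should_pivot_back(0.040, slipping))  # two straight weeks below the floor
```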

You’ve also got to know your segments well enough to tell whether an outcome is the result of a seasonal change or some other factor, or whether all else has remained equal and your special new thing simply stopped performing.

Tread lightly, grasshopper!

Post-Test Data and Value-Based Recommendations

You’ve done it. You’ve run a successful test, and your hypothesis held up – you know a change you can make that could have an impact on your website. How do you get others excited about it, especially if testing is undervalued in your organization, or if silos within your organization control content, web development, and the like?

The key is in what we discussed before – knowing the environment around the test you’re conducting, and being able to tie your tests to a marketing or corporate business goal.

No one says no to more sales. No one says no to more leads. Usually, anyway. When you tie your results to relevant business goals, your proposed changes become that much easier to sell in and get approved.

Take, for instance, this test we ran on Airstream’s website: testing whether a CTA in a static banner would perform better than an image slider with no calls-to-action. We didn’t just measure the new CTA; we also measured the other CTAs on the page that led to the same action (visiting the brochure download page). The purpose was not only to give users a link to the brochure page earlier in their product-page experience, but also to increase the overall traffic (via CTA clicks) from the test page to the LP, and hopefully to drive more conversions on the LP itself.

The test itself resulted in a 3.61% increase in click-through rate to the brochure landing page. If you’re a data analyst or a digital marketing junkie, you might be impressed. A 3.5%+ increase, over time, has the potential to produce real, tangible results. So, why not forecast those results?

That’s the path we took in pitching a wholesale change to all product pages. The 3.61% CTR increase, while impressive, doesn’t tell the story of the impact such a change could have on the website overall. So our team did the digging to find out how much traffic was going to each brochure page from the pages where the proposed change would be rolled out, and we did the math on how many new brochure downloads (each considered a sales-qualified lead, or SQL) the 3.61% lift would generate if we made the change on each of these pages.

The numbers don’t lie. The greater, sitewide change was forecast to produce an estimated 13.3% increase in brochure download leads across the entire website. And, knowing the SQL-to-purchase ratio, we were able to go a step further, projecting the retail sales and revenue the client could expect.
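Here’s what that kind of forecast can look like, as a minimal Python sketch. Every input except the 3.61% test result is hypothetical: the page names, traffic, baseline CTRs, landing page download rate, sitewide download baseline, SQL-to-purchase ratio, and revenue per sale are made up, and the sketch assumes the 3.61% result is a relative lift in CTR that holds evenly on each page. Because the inputs are invented, the output won’t reproduce the 13.3% forecast above; the point is the chain of multiplication from clicks to leads to revenue.

```python
from dataclasses import dataclass

@dataclass
class ProductPage:
    name: str
    monthly_visits: int
    brochure_ctr: float  # current click-through rate to the brochure landing page

# Assumptions (all hypothetical except the 3.61% test result itself).
CTR_LIFT = 0.0361                  # treats the test result as a relative CTR lift
LP_DOWNLOAD_RATE = 0.30            # share of brochure LP visitors who download
SITEWIDE_MONTHLY_DOWNLOADS = 2_000 # current brochure downloads across the site
SQL_TO_PURCHASE = 0.05             # share of brochure-download SQLs that buy
REVENUE_PER_SALE = 90_000

pages = [
    ProductPage("product page A", 40_000, 0.08),
    ProductPage("product page B", 25_000, 0.06),
    ProductPage("product page C", 10_000, 0.05),
]

def extra_downloads(page: ProductPage) -> float:
    """Additional brochure downloads per month if the CTR lift holds on this page."""
    baseline_clicks = page.monthly_visits * page.brochure_ctr
    return baseline_clicks * CTR_LIFT * LP_DOWNLOAD_RATE

new_leads = sum(extra_downloads(page) for page in pages)
lead_increase_pct = new_leads / SITEWIDE_MONTHLY_DOWNLOADS * 100
new_sales = new_leads * SQL_TO_PURCHASE
new_revenue = new_sales * REVENUE_PER_SALE

print(f"{new_leads:.1f} extra brochure downloads/month ({lead_increase_pct:.1f}% sitewide)")
print(f"{new_sales:.2f} extra sales/month, about ${new_revenue:,.0f} in revenue")
```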

All new product pages now have the brochure CTA banner in place of the old image slider…and the updates are in progress for the rest of the product pages.

Don’t Settle – Find the Larger Impact

The lesson is that following the data through to its business impact – and not just settling for a marginal increase in a click or conversion rate, no matter how important and impactful that result can be – is the key to helping your organization fall in love with testing.

Do the work to find the environment your test results can live in. Find the larger impact. Get really good at forecasting.

And use your understanding of business impact to build a culture that gets excited about the little changes and smaller percentage increases that CRO testing can bring. Tying your results to business impact and revenue is a powerful thing.

Like what you’ve read? Get in contact with us today and let’s start a conversation on how E3 can begin to deliver impactful business results for you.